Last week, Dwarkesh Patel put words to an uneasy feeling that resonated with me:

I think we’re at what late February 2020 was for Covid, but for AI.

If you can remember back to February 2020, both the media and the general public were still in normal-times mode, discussing Trump’s impeachment, the Democratic primaries and Harvey Weinstein. Epidemiologists recognized that something big and potentially unprecedented was coming, but the news hadn’t yet broken through.

One of the first front-page articles I can find in the NY Times about Covid is from February 22nd, 2020.

Just three weeks later, markets had crashed and schools were closing. The world was upended. Covid had become the context for everything.

Patel foresees a similar pattern with AI:

Every single world leader, every single CEO, every single institution, members of the general public are going to realize pretty soon that the main thing we as a world are dealing with is Covid, or in this case, AI.

By “pretty soon,” I don’t think Patel believes we’re three weeks away from global upheaval. But the timeframes are much shorter than commonly believed — and getting shorter month by month.

Wait, what? And why?

This post is meant to be an explainer for friends and readers who haven’t been paying close attention to what’s been happening in AI. Which is okay! Technology is full of hype and bullshit, which most people should happily ignore.

We’ve seen countless examples of Next Big Things ultimately revealed to be nothing burgers. Many of the promises and perils of AI could meet a similar fate. Patel himself is putting together a media venture focused on AI, so of course he’s going to frame the issue as existential. Wherever there are billions of dollars being spent, there’s hype and hyperbole, predictions and polemics.

Still — much like with epidemiologists and Covid in February 2020, the folks who deal with AI for a living are pretty sure something big is coming, and sooner than expected.

Something big doesn’t necessarily mean catastrophic; the Covid analogy only goes so far. Indeed, some researchers see AI ushering in a golden age of scientific enlightenment and economic bounty. Others are more pessimistic — realistic, I’d say — warning that we’re in for a bumpy and unpredictable ride, one that’s going to be playing out in a lot of upcoming headlines.

The sky isn’t falling — but it’s worth directing your gaze upwards.

The world of tomorrow, today

Science fiction is becoming science fact much faster than almost anyone anticipated. One way to track this is to ask interested parties how many years it will be before we have artificial general intelligence (AGI) capable of doing most human tasks. In 2020, the average estimate was around 50 years. By the end of 2023, it was seven.

Over the past few months, a common prediction has become three years. That’s the end of 2027. Exactly how much AI progress we’ll see by then has become the subject of a recent bet. Of the ten evaluation criteria for the bet, one hits particularly close to home for me:

8) With little or no human involvement, [AI will be able to] write Oscar-caliber screenplays.

As a professional screenwriter and Academy voter, I can’t give you precise delimiters for “Oscar-caliber” versus “pretty good” screenplays. But the larger point is that AI should be able to generate text that feels original, compelling and emotionally honest, both beat-by-beat and over the course of 120 satisfying pages. Very few humans can do that, so will an AI be able to?

A lot of researchers say yes, and by the end of 2027.

I’m skeptical — but that may be a combination of ego preservation and goalpost-moving. It’s not art without struggle, et cetera.

The fact that we’ve moved from the theoretical (“Could AI generate a plausible screenplay?”) to the practical (“Should an AI-generated screenplay be eligible for an Oscar?”) in two years is indicative of just how fast things are moving.

So what happened? Basically, AI got smarter much faster than expected.

Warp speed

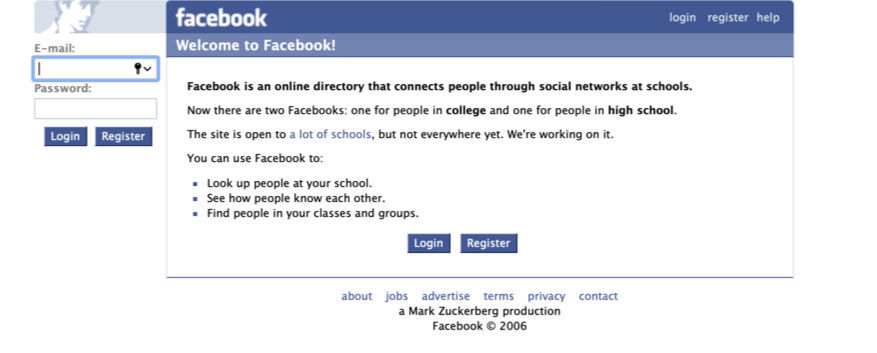

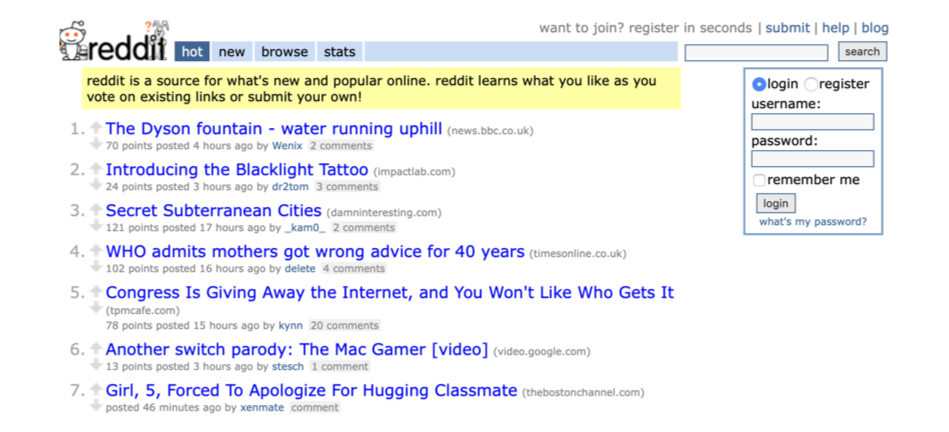

Some of the acceleration is easy to notice. When large language models (LLMs) like ChatGPT debuted at the end of 2022, they felt like a novelty. They generated text and images, but nothing particularly useful, and they frequently “hallucinated,” a polite way of saying made shit up.

If you shrugged and moved on, I get it.

The quality of LLMs’ output has improved a lot over the past two years, to the point that real professionals are using them daily. Even in their current state, and even if they never get any better, LLMs can disrupt a lot of work, for better and for worse.

An example: Over the holidays, I built two little iOS apps using Cursor, which generates code from plain text using an LLM.

Here’s what I told it as I was starting one app:

I’ll be attaching screen shots to show you what I’m describing.

- Main screen is the starting screen upon launching the app. There will be a background image, but you can ignore that for now. There are three buttons. New Game, How to Play, and Credits.

- How to Play is reached through the How to Play button on the main screen. The text for that scrolling view is the file in the project how-to-play.txt.

- New Game screen is reached through the new game button. It has two pop-up lists. the first chooses from 3 to 20. the second from 1 to 10. Clicking Start takes you into the game. (In the game view, the top-right field should show the players times round, so if you had 3 players and five rounds, it would start with 1/15, then 2/15.

- the Setup screen is linked to from the game screen, if they need to make adjustments or restart/quit the game.

Within seconds, it had generated an app I could build and run in Xcode. It’s now installed on my phone. It’s not a commercial app anyone will ever buy, but if it were, this would be a decent prototype.
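To make the counter in that prompt concrete, here is a minimal sketch of the logic it describes. This is not the code Cursor generated (that was a Swift app). The function name `turn_labels` is made up for illustration, and I’m assuming the first pop-up list (3 to 20) is the number of players and the second (1 to 10) is the number of rounds, which is what the 3-players, five-rounds example suggests.

```python
def turn_labels(players: int, rounds: int) -> list[str]:
    """Return the counter text for every turn: '1/15', '2/15', and so on."""
    # Assumed ranges, taken from the two pop-up lists in the prompt.
    if not 3 <= players <= 20:
        raise ValueError("players must be between 3 and 20")
    if not 1 <= rounds <= 10:
        raise ValueError("rounds must be between 1 and 10")

    # The prompt defines the total as players times rounds.
    total = players * rounds
    return [f"{turn}/{total}" for turn in range(1, total + 1)]


labels = turn_labels(players=3, rounds=5)
print(labels[0], labels[1], labels[-1])  # 1/15 2/15 15/15
```

The point is less the code than the gap it fills: a plain-English sentence like “players times round” is enough for the tool to work out the rest.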

Using Cursor feels like magic. I’m barely a programmer, but in the hands of someone who knew what they were doing, it’s easy to imagine technology like this tripling their productivity. ((Google’s CEO says that more than 25% of their code is already being generated by AI.)) That’s great for the software engineer — unless the company paying them decides they don’t need triple the productivity and will instead just hire one-third the engineers.
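The arithmetic behind that worry is simple enough to sketch. The team size of 30 below is a hypothetical number, not a figure from any real company, and `engineers_needed` is a name invented for this example. It assumes output scales linearly with headcount, which real teams rarely do.

```python
import math


def engineers_needed(current_team: int, productivity_multiplier: float) -> int:
    """Engineers required to match the current team's total output."""
    # If each engineer produces `productivity_multiplier` times as much,
    # the same output needs proportionally fewer people (rounded up).
    return math.ceil(current_team / productivity_multiplier)


print(engineers_needed(30, 3.0))  # 10
```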

The same calculation can be applied to nearly any industry involving knowledge work. If your job can be made more productive by AI, your position is potentially in jeopardy.

That LLMs are getting better at doing actually useful things is notable, but that’s not the main reason timelines are shortening.

Let’s see how clever you really are

To measure how powerful a given AI system is, you need to establish some benchmarks. Existing LLMs easily pass the SAT, the GRE, and most professional certification exams. So researchers must come up with harder and harder questions, ones that won’t be in the model’s training set.

No matter how high you set the bar, the newest systems keep jumping over it. Month after month, each new model does a little better. Then, right before the holidays, OpenAI announced that its o3 system made a huge and unexpected leap.

With LLMs like ChatGPT or Claude, we’re used to getting fast and cheap answers. They spit out text or an image in seconds. In contrast, o3 spends considerably more time (and computing power) planning and assessing. It’s a significant change in the paradigm. The o3 approach is slower and more expensive, potentially thousands of dollars per query versus mere pennies, but the results for certain types of questions are dramatically better. For billion-dollar companies, it’s worth it.

Systems like these are particularly good at solving difficult math and computer science problems. And since AI systems themselves are based on math and computer science, today’s model will help build the next generation. This virtuous cycle is a significant reason the timelines keep getting shorter. AI is getting more powerful because AI is getting more powerful.

When and why this will become the major story

In 2020, Covid wasn’t on the front page of the NY Times until its economic and societal impacts were unmistakable. The stock market tanked; hospitals were filling up. Covid became impossible to ignore. Patel’s prediction is that the same thing will happen with AI. I agree.

I can imagine many scenarios bringing AI to the front page, none of which involve a robot uprising.

Here are a few topics I expect we’ll see in the headlines over the next three years.

- Global tensions. As with nuclear technology during the Cold War, big nations worry about falling behind. China has caps on the number of high-performance AI chips it’s allowed to import. Those chips it needs? They’re made in Taiwan. Gulp.

- Espionage. Corporations spend billions training their models. ((DeepSeek, a Chinese firm, apparently trained their latest LLM for just $6 million, an impressive feat if true.)) Those model weights are incredibly valuable, both to competitors and bad actors.

- Alignment. This is a term of art for “making sure the AI doesn’t kill us,” and is a major source of concern for professionals working in the field. How do you teach AI to act responsibly, and how do you know it’s not just faking it? AI safety is currently the responsibility of corporations racing to be the first to market. Not ideal!

- Nationalizing AI. For all three of the reasons above, a nation (say, the U.S.) might decide that it’s a security risk to allow such powerful technology to be controlled by anyone but the government.

- Spectacular bankruptcy. Several of these companies have massive valuations and questionable governance. It seems likely one or more will fail, which will lead to questions about the worth of the whole AI industry.

- The economy. The stock market could skyrocket, or tank. Many economists believe AI will lead to productivity gains that will increase GDP, but also, people work jobs to earn money and buy things? That seems important.

- Labor unrest. Unemployment is one thing, but what happens when entire professions are no longer viable? What’s the point in retraining for a different job if AI could do that one too?

- Breakthroughs in science and medicine. Once you have one AI as smart as a Nobel Prize winner, you can spin up one million of them to work in parallel. New drugs? Miracle cures? Revolutionary technology, like fusion power and quantum computing? Everything seems possible.

- Environmental impact (bad). When you see articles about the carbon footprint of LLMs, they’re talking about the initial training stage. That’s the energy-intensive step, but also way smaller than you may be expecting? After that, the carbon impact of each individual query is negligible, on the order of watching a YouTube video. That said, the techniques powering systems like o3 involve using more power to deliver answers, which is why you see Microsoft and others talking about recommissioning nuclear plants. Also, e-waste! All those outdated chips need to be recycled.

- Environmental impact (good). AI systems excel at science, engineering, and anything involving patterns. Last month, Google’s DeepMind pushed weather forecasting from 10 days to 15 days. Work like this could help us deal with the effects of climate change, by improving crop yields and the energy grid, for example.

So how freaked out should you be?

What is an ordinary person supposed to do with the knowledge that the world could suddenly change?

My best advice is to hold onto your assumptions about the future loosely. Make plans. Live your life. Pay attention to what’s happening, but don’t let it dominate your decision-making. Don’t let uncertainty paralyze you.

A healthy dose of skepticism is warranted. But denial isn’t. I still hear smart colleagues dismissing AI as fancy autocomplete. Sure, fine — but if it can autocomplete a diagnosis more accurately than a trained doctor, we should pay attention.

It’s reasonable to assume that 2027 will look a lot like 2024. We’ll still have politics and memes and misbehaving celebrities. It’ll be different from today in ways we can’t fully predict. The future, as always, will remain confusing, confounding and unevenly distributed.

Just like the actual pandemic wasn’t quite Contagion or Outbreak, the arrival of stronger AI won’t closely resemble Her or The Terminator or Leave the World Behind. Rather, it’ll be its own movie of some unspecified genre.

Which hopefully won’t be written by an AI. We’ll see.

Thanks to Drew, Nima and other friends for reading an early draft of this post.